From time to time, we all fall headlong into working environments conducive to experimentation. For us at L2D, that “experimental” environment has most recently been catalyzed by work on several projects that required testing motion capture and photogrammetry-related tracking hardware.

From among the new hardware in our toy box, the device that has most easily impressed us with its flexibility and ease of use has to be the now-defunct Microsoft Kinect. Now, before you slam us for being late to the party (like, showing-up-the-following-morning kind of late), know that we’ve tinkered with the peripheral for several years but, until recently, hadn’t had a practical application for integrating it into any of our projects. We mistakenly viewed it as a consumer-grade device capable of capturing real-time but ultimately limited-scope data.

The Blank Wall of Doom

It all started with a big, blank space on the most obvious wall in our office, just to the right of our main entrance. When building out our new office (as shown in the screenshot below), we designed this wall specifically to host a “showcase” interactive piece for incoming visitors. Yet, as the proverbial cobbler’s kids prance shoeless and carefree, the wall, our marquee wall, remained awkwardly blank for much of our first year in the space, with no plan in sight to change that.

You see, we didn’t want to simply create a piece of interactive eye candy for in-office visitors, but rather an interactive conduit that invited both in-office and remote visitors into an experiential exchange, ideally in real time. Inspired by the amazing earlier explorations of George MacKerron and Mr. Doob, we found that the Kinect allowed us to accomplish just that.

The Gory Details

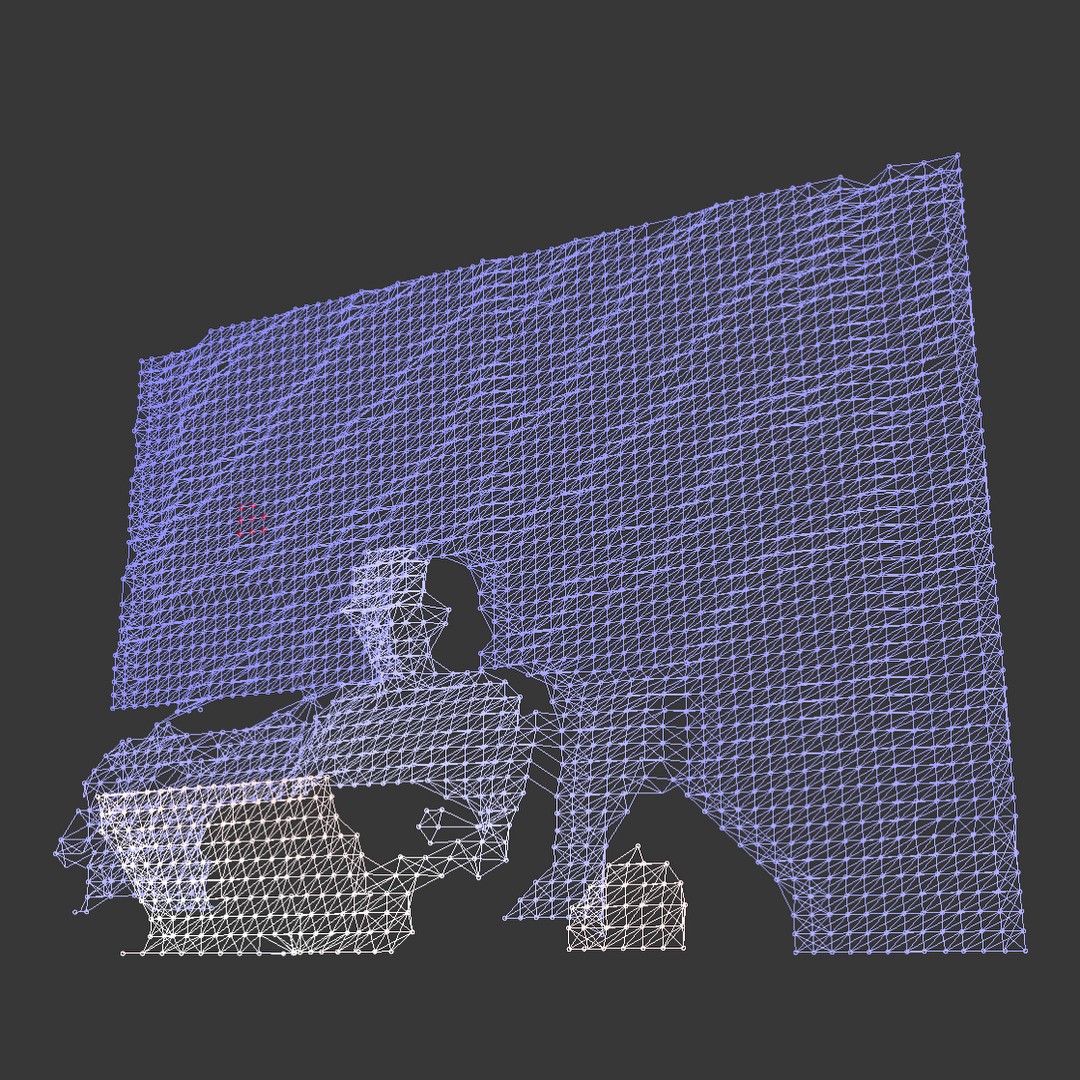

To realize the level of interaction we wanted, we first connected a Kinect for Xbox 360 (the original iteration of the device) to a Pine64 single-board computer running libfreenect, an open source set of drivers and libraries for the Kinect. The Pine64 pulls the depth data stream from the Kinect and optimizes it so that the resulting visualization can run locally at 60 FPS for most users. From there, a WebSocket connection pushes the optimized stream to the Pine’s localhost, where a second WebSocket connection listens for the depth data and broadcasts it out to the web. On the receiving end, a custom three.js visualization, served by a Node.js / Express website, renders the data in the browser.

That site, which is currently viewable here, is also making its debut on a shiny new screen expertly positioned in the center of the aforementioned blank wall of doom! Existential designer crisis averted!
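One plausible way to handle that “optimize” step is to keep one depth sample per block of pixels and zero out readings outside a useful range before anything goes over the wire. The TypeScript sketch below is illustrative only, not the code running on our Pine64. It assumes a 640×480 frame of millimetre depths with 0 meaning “no reading”. The function name `packDepthFrame`, the 4-byte header layout, and the 500 to 4000 mm range are all hypothetical choices.

```typescript
// Assumed input: a 640x480 row-major frame of depths in millimetres,
// where 0 means "no reading" (an assumption for this sketch).
const SRC_WIDTH = 640;
const SRC_HEIGHT = 480;

/**
 * Hypothetical packer: keeps one sample per `step` x `step` block and writes
 * [width: uint16][height: uint16][depth: uint16 * width * height], little-endian.
 * Samples outside [minMm, maxMm] are zeroed so the client can skip them.
 */
function packDepthFrame(
  depth: Uint16Array,
  step: number,
  minMm = 500,
  maxMm = 4000,
): ArrayBuffer {
  if (depth.length !== SRC_WIDTH * SRC_HEIGHT) {
    throw new Error(`expected ${SRC_WIDTH * SRC_HEIGHT} samples, got ${depth.length}`);
  }
  const outW = Math.floor(SRC_WIDTH / step);
  const outH = Math.floor(SRC_HEIGHT / step);
  const buffer = new ArrayBuffer(4 + outW * outH * 2);
  const view = new DataView(buffer);

  // Small header so the receiver knows the grid size.
  view.setUint16(0, outW, true);
  view.setUint16(2, outH, true);

  let offset = 4;
  for (let y = 0; y < outH; y++) {
    for (let x = 0; x < outW; x++) {
      // Take the top-left sensor pixel of each block.
      const mm = depth[y * step * SRC_WIDTH + x * step];
      const kept = mm >= minMm && mm <= maxMm ? mm : 0;
      view.setUint16(offset, kept, true);
      offset += 2;
    }
  }
  return buffer;
}
```

With `step = 4`, a 640×480 frame of 16-bit samples (614,400 bytes) shrinks to a 160×120 grid of 38,404 bytes including the header, roughly a sixteenth of the data per frame.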

Yet, though we were very excited to have gotten this far, keeping the experience isolated on a sub-domain didn’t seem accessible enough. So, going one step further, we routed the same depth data to the header of our primary website, inviting all of our desktop (and, in the near future, mobile) users worldwide to interact with the experience.
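Serving one stream to both the sub-domain and the homepage header largely comes down to fanning each incoming frame out to every connected browser, whichever page it is on. Here is a minimal TypeScript sketch of that relay logic. It assumes frames arrive as `ArrayBuffer`s. `DepthRelay` and `FrameSink` are hypothetical names. The socket shape is kept abstract so the logic can run without a network.

```typescript
// WebSocket readyState values per the WebSocket standard:
// CONNECTING 0, OPEN 1, CLOSING 2, CLOSED 3.
const OPEN = 1;

// Hypothetical minimal shape of a connected viewer socket.
interface FrameSink {
  readyState: number;
  send(data: ArrayBuffer): void;
}

// Hypothetical relay: forwards each depth frame to every open viewer.
class DepthRelay {
  private clients: FrameSink[] = [];
  private latest: ArrayBuffer | null = null;

  add(client: FrameSink): void {
    if (this.clients.indexOf(client) === -1) this.clients.push(client);
    // A viewer that joins mid-stream gets the most recent frame right away.
    const latest = this.latest;
    if (latest !== null && client.readyState === OPEN) {
      client.send(latest);
    }
  }

  remove(client: FrameSink): void {
    this.clients = this.clients.filter((c) => c !== client);
  }

  // Send one frame to every open client; returns how many received it.
  publish(frame: ArrayBuffer): number {
    this.latest = frame;
    let delivered = 0;
    for (const client of this.clients) {
      if (client.readyState === OPEN) {
        client.send(frame);
        delivered++;
      }
    }
    return delivered;
  }
}
```

With the `ws` package for Node.js, for example, each socket from the server’s `connection` event could be passed to `add` and removed again on its `close` event, while frames from the capture side go through `publish`. Caching the latest frame means a visitor who connects mid-stream sees the scene immediately instead of waiting for the next frame.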

A real-time, globally accessible 3D experience

So, practically speaking, what does this all mean? Well, platitudes and technical jargon aside, it means that we are now able to track the movements of people in front of the Kinect in our office, in 3D, and broadcast that data, as a real-time visualization, all over the world. It means that (at least during normal EST business hours) we can now invite users from around the globe into our office, and into an interactive exchange with our staff.
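Turning the Kinect’s per-pixel distances into positions in 3D is a standard pinhole-camera back-projection. A pixel (u, v) at depth Z maps to X = (u − cx)·Z / fx and Y = (v − cy)·Z / fy. The browser-side TypeScript sketch below performs that step. It assumes a downsampled grid of millimetre depths, with 0 meaning no reading. The intrinsics (fx = fy = 580, cx = 320, cy = 240) are rough ballpark values for the original Kinect’s 640×480 depth camera, not calibrated ones. `depthGridToPoints` is a hypothetical name.

```typescript
// Approximate (uncalibrated) intrinsics for the original Kinect depth camera
// at 640x480; ballpark assumptions, not measured values.
const FX = 580;
const FY = 580;
const CX = 320;
const CY = 240;

/**
 * Hypothetical helper: converts a downsampled depth grid (millimetres,
 * 0 = no reading) into a flat [x, y, z, x, y, z, ...] array in metres,
 * using three.js conventions: +Y is up and the camera looks down -Z.
 * Grid cell (x, y) corresponds to sensor pixel (x * step, y * step).
 */
function depthGridToPoints(
  depth: Uint16Array,
  width: number,
  height: number,
  step: number,
): Float32Array {
  const points: number[] = [];
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const mm = depth[y * width + x];
      if (mm === 0) continue; // skip missing or clipped samples
      const z = mm / 1000; // millimetres -> metres
      const u = x * step;
      const v = y * step;
      points.push(
        ((u - CX) * z) / FX,
        (-(v - CY) * z) / FY, // image rows grow downward; three.js +Y is up
        -z, // in front of a three.js camera means negative Z
      );
    }
  }
  return new Float32Array(points);
}
```

In three.js the result can back a point cloud through `geometry.setAttribute("position", new THREE.BufferAttribute(points, 3))` on a `THREE.BufferGeometry` rendered with `THREE.Points`. Repeating that for each incoming frame animates the people moving in front of the sensor.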

Because remote viewers’ interaction is still limited, we hope to add a feature that lets visiting users not only control the angle from which they watch us interact with the screen, but also contribute to the interaction themselves. Will this look like messaging, painting, or something else? We aren’t sure just yet. But we will be happy to invite you to the party when we do!

TL;DR

We fooled around with a Microsoft Kinect, gently encouraged it to give us optimized depth data, used a Pine64 to serve the data via WebSocket, and are rendering that data as a live three.js visualization on our website and in our office. It’s pretty awesome.